I am a VP of Research at Google DeepMind. I joined DeepMind in 2014 to pursue new solutions for artificial general intelligence. Currently, I oversee much of DeepMind's foundational research, leading teams that explore new AI innovations to address the open questions that today's methods cannot yet answer.

Before joining DeepMind in early 2014, I had found my way into AI research via an unusual path. After an undergraduate degree in religion and philosophy from Reed College, I veered off course and became a computer scientist. My PhD with Yann LeCun at NYU focused on machine learning using Siamese neural nets (often called a 'triplet loss' today), face recognition algorithms, and deep learning for mobile robots in the wild. My thesis, 'Learning Long-range vision for offroad robots', received the Outstanding Dissertation award in 2009. I did a postdoc at the CMU Robotics Institute, working with Drew Bagnell and Martial Hebert, and then became a research scientist in the Vision and Robotics group at SRI International in Princeton, NJ.

After joining DeepMind, then a small 50-person startup that had just been acquired by Google, my research focused on a number of fundamental challenges in AGI, including continual and transfer learning, deep reinforcement learning for robotics and control problems, and neural models of navigation (see publications). I have proposed neural approaches such as policy distillation, progressive nets, and elastic weight consolidation to solve the problem of catastrophic forgetting.

In the broader AI community, I was a co-founder and co-Editor-in-Chief of a new open journal, TMLR. I have had the honor of sitting on the executive boards of CoRL and WiML (Women in Machine Learning), am a fellow of the European Laboratory for Learning and Intelligent Systems (ELLIS), and was a founding organizer of NAISys (Neuroscience for AI Systems).

My pronouns are she/her.

Photos by DeepMind on Unsplash

Latest News

News archive is here

ICLR Keynote, Vienna (May 2024)

Title: Learning through AI’s winters and springs: Unexpected truths on the road to AGI

After decades of steady progress and occasional setbacks, the field of AI now finds itself at an inflection point. AI tools have exploded into the mainstream, we're yet to hit the ceiling of scaling dividends, and the community is asking itself what comes next. In this talk, Raia will draw on her 20 years of experience as an AI researcher and AI leader to examine how our assumptions about the path to Artificial General Intelligence (AGI) have evolved over time, and to explore the unexpected truths that have emerged along the way.

Exploring AI Evolution at EmTech MIT

Keynote speaker at UCL: NeuroAI seminar: "Embodied AGI and The Future of Robotics" (July 2022)

Keynote speaker at IEEE ICDL, Queen Mary University: "The transformative power of modern AI methods" (Sep 2022)

Panelist for Turing AI World-Leading Researcher Fellowships. You can learn more about the UK's Engineering and Physical Sciences Research Council funding opportunities here.

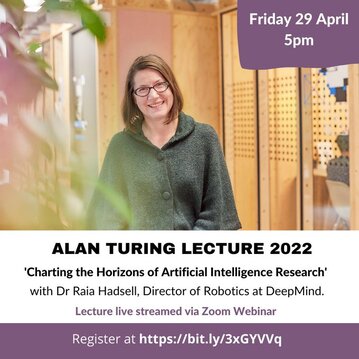

Alan Turing lecture: Charting the Horizons of Artificial Intelligence (April 2022)

On April 29, I made my post-pandemic return to proper, in-person public speaking, giving the annual Turing Lecture at King's College, Cambridge University. It was my first opportunity to speak about AI on a big stage in quite a while, and a lot has changed. My talk covered some of the exciting work of the last year and, of course, I did some prognostication about the future of robotics and other AI technologies.

The full video is now available!

December 2021

Hugo Larochelle, Kyunghyun Cho, and I have launched TMLR (Transactions on Machine Learning Research), a new journal to support the publication needs of the AI community. The three of us will be co-Editors-in-Chief. We are currently emailing invitations to join us as Action Editors, so please read about the journal and join for the inaugural year if you are able!

November 2021

The Conference on Robot Learning (CoRL) was hosted in London by myself as General Chair, Sandra Faust as Program Chair, and an amazing organising committee. It was certainly a challenging year to plan a conference - we spent a solid 10 months negotiating the uncertainties of COVID and the unknowns of a hybrid format. In retrospect it went very well, and I'm incredibly happy that we squeaked it in before Omicron roared through London!

Check out the CoRL website for artifacts from the conference: recordings of talks, tutorials, and keynotes as well as published papers in PMLR.

October 2021

There's an article in IEEE Spectrum, by Tom Chivers, about DeepMind, catastrophic forgetting, robotics, and my research. I think Tom really captured the problem and the research challenge nicely.

Fun fact: Tom interviewed me for this article almost 2 years ago, just before the pandemic broke. IEEE delayed publication until now because of the ensuing COVID disruption. So if the research in the article has a whiff of 2019, that's why!

1.9.2018

Videos and Podcasts! I'm not sure why, but I've suddenly got a lot of media links to share...

- Women of Silicon Roundabout: a 20-minute talk about my background, my navigation research, and a brief discussion of the importance of ethical AI and the dangers of Pink Science!

- BBC4 'The Joy of AI' with Jim Al-Khalili: a 60-minute documentary about the origins of AI and current cutting-edge research. I'm only interviewed for about 2 minutes, but hey, it's my first primetime appearance :). This is only available until the end of September. If you're not in the UK, you'll have to use a VPN.

- GCP Podcast: Google Cloud Platform hosts a fun and insightful podcast and they interviewed me about my Street View navigation research.

23.5.2018

It was thrilling to present a bunch of new research on the giant ICRA stage in Brisbane! Here are the papers I talked about:

Grid cells:

Vector-based navigation using grid-like representations in artificial agents

MERLIN:

Unsupervised Predictive Memory in a Goal-Directed Agent

Navigating in Street View:

Learning to Navigate in Cities without a Map

10.5.2018

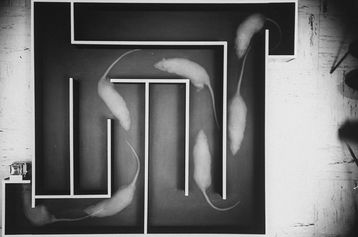

When we started studying navigation at DeepMind in 2015, we weren't looking for grid cells (but that is what we found). Check out our new Nature publication (downloadable pdf here) and a nice article here with comments from Edvard Moser, who, along with his partner May-Britt Moser, won a Nobel Prize in 2014 for the discovery of grid cells in the rodent brain. This research is important, in part, because it demonstrates how deep neural architectures and end-to-end optimisation can be used to understand and confirm neuroscientists' theories.

Additional press in The Guardian, Wired, ABC, New Scientist, the Financial Times, and Ars Technica.

17.4.2018

This is an AI agent trained to navigate through Paris using only the visual images available through Street View. This is the first time that deep RL has been demonstrated to work at city-scale for a complex visual task. Please enjoy the arXiv paper and full video!

Recent Research

Learning to Navigate in Cities without a Map. Piotr Mirowski, Matthew Koichi Grimes, Mateusz Malinowski, Karl Moritz Hermann, Keith Anderson, Denis Teplyashin, Karen Simonyan, Koray Kavukcuoglu, Andrew Zisserman, Raia Hadsell. Long-range navigation is a complex cognitive task that relies on developing an internal representation of space, grounded by recognisable landmarks and robust visual processing, that can simultaneously support continuous self-localisation ("I am here") and a representation of the goal ("I am going there"). We present an end-to-end deep reinforcement learning approach that can be applied on a city scale, as well as an interactive environment that uses Google Street View for its photographic content and worldwide coverage.

Distral: Robust Multitask Reinforcement Learning. Yee Whye Teh, Victor Bapst, Wojciech Marian Czarnecki, John Quan, James Kirkpatrick, Raia Hadsell, Nicolas Heess, Razvan Pascanu. We propose a new approach for joint training of multiple tasks, which we refer to as Distral (Distill & transfer learning). Instead of sharing parameters between the different workers, we propose to share a "distilled" policy that captures common behaviour across tasks. Each worker is trained to solve its own task while constrained to stay close to the shared policy, and the shared policy is trained by distillation to be the centroid of all task policies. We show that our approach supports efficient transfer on complex 3D environments, outperforming several related methods. Moreover, the proposed learning process is more robust and more stable, attributes that are critical in deep reinforcement learning.
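To make the two halves of Distral concrete, here is a minimal single-state sketch with discrete actions. It assumes the per-step regularized reward takes the form task reward minus c_kl·log(π_i/π_0) minus c_ent·log π_i (a KL penalty toward the shared policy plus an entropy bonus), and it uses the fact that, at a single state, the distribution minimizing the summed KL(π_i ‖ π_0) over tasks is the plain average of the task policies, which is one way to read "centroid". The function names and coefficient values are hypothetical, not taken from the paper's code.

```python
import math


def kl(p, q):
    """KL(p || q) for discrete distributions given as lists of probabilities."""
    return sum(pa * math.log(pa / qa) for pa, qa in zip(p, q) if pa > 0)


def regularized_reward(reward, pi_task, pi_shared, action, c_kl=0.5, c_ent=0.1):
    """Assumed Distral-style reward: penalize drifting from the shared policy
    and reward entropy of the task policy (c_kl and c_ent are illustrative)."""
    log_ratio = math.log(pi_task[action] / pi_shared[action])
    return reward - c_kl * log_ratio - c_ent * math.log(pi_task[action])


def distill(task_policies):
    """Shared policy at one state: the average of the task policies, which
    minimizes the summed KL(pi_i || pi_0) over tasks."""
    n = len(task_policies)
    num_actions = len(task_policies[0])
    return [sum(p[a] for p in task_policies) / n for a in range(num_actions)]


def total_kl(task_policies, shared):
    """Summed divergence of every task policy from the shared one."""
    return sum(kl(p, shared) for p in task_policies)


tasks = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]
shared = distill(tasks)
print(shared, total_kl(tasks, shared))
```

In the full method these quantities are computed per state along trajectories and optimized with policy gradients; the sketch only isolates the regularizer and the distillation target.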

Learning to Navigate in Complex Environments. Piotr Mirowski*, Razvan Pascanu*, Fabio Viola, Hubert Soyer, Andrew J. Ballard, Andrea Banino, Misha Denil, Ross Goroshin, Laurent Sifre, Koray Kavukcuoglu, Dharshan Kumaran, Raia Hadsell. ICLR 2017.

In this work we formulate the navigation question as a reinforcement learning problem and show that data efficiency and task performance can be dramatically improved by relying on additional auxiliary tasks. In particular we consider jointly learning the goal-driven reinforcement learning problem with a self-supervised depth prediction task and a self-supervised loop closure classification task. This approach can learn to navigate from raw sensory input in complicated 3D mazes, approaching human-level performance even under conditions where the goal location changes frequently.
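The auxiliary-task idea amounts to adding extra supervised losses to the reinforcement learning loss so that the shared layers receive a denser training signal. Below is a minimal sketch under stated assumptions: depth prediction is treated as classification over quantized depth bins (cross-entropy), loop closure as binary classification (binary cross-entropy), and the weights beta_depth and beta_lc are hypothetical hyperparameters. The RL loss itself is simply passed in as a number.

```python
import math


def cross_entropy(probs, target_bin):
    """Negative log-likelihood of the correct depth bin."""
    return -math.log(probs[target_bin])


def binary_cross_entropy(p, label):
    """Loop-closure loss; label is 1 if the agent has returned to a visited place."""
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


def total_loss(rl_loss, depth_probs, depth_targets, loop_prob, loop_label,
               beta_depth=1.0, beta_lc=1.0):
    """RL loss plus weighted auxiliary losses (weights are hypothetical)."""
    depth_loss = sum(cross_entropy(p, t) for p, t in zip(depth_probs, depth_targets))
    depth_loss /= len(depth_targets)  # average over the predicted depth pixels
    loop_loss = binary_cross_entropy(loop_prob, loop_label)
    return rl_loss + beta_depth * depth_loss + beta_lc * loop_loss


# Two depth "pixels", each with a distribution over 3 hypothetical depth bins.
loss = total_loss(rl_loss=1.0,
                  depth_probs=[[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
                  depth_targets=[0, 1],
                  loop_prob=0.9, loop_label=1)
print(loss)
```

Setting both weights to zero recovers the plain RL objective, which makes it easy to compare the agent with and without auxiliary supervision.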

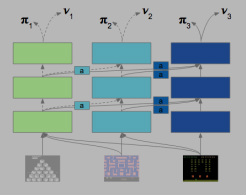

Progressive Neural Networks. Andrei A. Rusu, Neil C. Rabinowitz, Guillaume Desjardins, Hubert Soyer, James Kirkpatrick, Koray Kavukcuoglu, Razvan Pascanu, Raia Hadsell. arXiv, 2016.

Learning to solve complex sequences of tasks, while both leveraging transfer and avoiding catastrophic forgetting, remains a key obstacle to achieving human-level intelligence. Progressive networks represent a step forward in this direction: they are immune to forgetting and can leverage prior knowledge via lateral connections to previously learned features.
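Progressive nets add a new column of layers for each task, freeze the earlier columns, and feed the earlier columns' hidden activations into the new column through lateral connections, so that layer i of column k computes roughly h_i^(k) = f(W_i^(k) h_{i-1}^(k) + Σ_{j<k} U_i^(k:j) h_{i-1}^(j)). The pure-Python sketch below illustrates that forward pass, assuming ReLU units, no biases, and no laterals into the first layer (all columns see the same input); the function names and toy weights are hypothetical. Because earlier columns are never updated, their outputs on old tasks cannot change, which is where the immunity to forgetting comes from.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, x) for x in vector]


def forward_column(x, weights, laterals, frozen_hiddens):
    """Forward pass of a new column.

    weights[i]: W_i for layer i of this column.
    laterals[i][j]: U_i from hidden layer i-1 of earlier column j into layer i (i >= 1).
    frozen_hiddens[j][i]: activation of layer i in earlier column j (weights frozen).
    """
    hiddens = []
    h_prev = x
    for i, w in enumerate(weights):
        pre = matvec(w, h_prev)
        if i > 0:  # assumption: no laterals into the first layer (shared input)
            for j, col in enumerate(frozen_hiddens):
                lateral = matvec(laterals[i][j], col[i - 1])
                pre = [a + b for a, b in zip(pre, lateral)]
        h_prev = relu(pre)
        hiddens.append(h_prev)
    return hiddens


x = [1.0, 2.0]
# Column 1: trained on task 1, then frozen (no earlier columns to read from).
col1 = forward_column(x, [[[1, 0], [0, 1]], [[1, 1]]], [[], []], [])
# Column 2: trained on task 2; its second layer also reads column 1's first layer.
col2 = forward_column(x, [[[0, 1], [1, 0]], [[1, 0]]], [[], [[[0.5, 0.5]]]], [col1])
print(col1[-1], col2[-1])
```

Only column 2's weights and laterals would receive gradients when training on task 2; column 1 is reused purely as a feature provider.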

Overcoming Catastrophic Forgetting in Neural Networks. James Kirkpatrick, Razvan Pascanu, Neil Rabinowitz, Joel Veness, Guillaume Desjardins, Andrei A. Rusu, Kieran Milan, John Quan, Tiago Ramalho, Agnieszka Grabska-Barwinska, Demis Hassabis, Claudia Clopath, Dharshan Kumaran, Raia Hadsell, arXiv, 2016.

Is catastrophic forgetting an inevitable feature of connectionist models? We show that it is possible to overcome this limitation and train networks that can maintain expertise on tasks which they have not experienced for a long time.
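The method proposed in that paper is elastic weight consolidation (EWC): while training on a new task B, parameters are pulled back toward the values θ*_A learned for an earlier task A by a quadratic penalty weighted by a diagonal Fisher information estimate, L(θ) = L_B(θ) + Σ_i (λ/2) F_i (θ_i − θ*_A,i)². The pure-Python sketch below assumes the Fisher diagonal is estimated as the mean of squared per-sample gradients of the log-likelihood on task A; the helper names and numbers are hypothetical.

```python
def fisher_diagonal(per_sample_grads):
    """Empirical diagonal Fisher: mean squared gradient of log p(y | x, theta)
    for each parameter, over samples from task A."""
    n = len(per_sample_grads)
    num_params = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(num_params)]


def ewc_penalty(theta, theta_star, fisher, lam):
    """Quadratic anchor toward task-A parameters, stiffer where Fisher is large."""
    return 0.5 * lam * sum(f * (t - ts) ** 2 for t, ts, f in zip(theta, theta_star, fisher))


def ewc_loss(task_b_loss, theta, theta_star, fisher, lam):
    """Total objective while training on task B."""
    return task_b_loss + ewc_penalty(theta, theta_star, fisher, lam)


fisher = fisher_diagonal([[1.0, 0.0], [3.0, 2.0]])
print(fisher)
print(ewc_loss(0.25, theta=[1.0, 1.0], theta_star=[0.0, 0.0], fisher=fisher, lam=2.0))
```

Here the first parameter has the larger Fisher value, so moving it away from its task-A value costs more than moving the second; λ trades off retaining task A against learning task B.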

Miscellaneous photos: conferences, London, life.

Impromptu WiML picnic at ICLR 2018 in Vancouver! A rose-ringed parakeet visiting my back garden. Zoe dyeing eggs. We will remember this Easter as the time Oliver (our dog) stole 5 Easter eggs and ate them, shells and all. On a sailboat in Genoa with robot friends Francesco, Dan, Jonas, and Jon. It is spring, time to walk! Beautiful Brisbane at night!